How Can We Decide Which Variable Selection Method to Use?

In the stepwise regression technique, we start by fitting the model with each individual predictor and see which one has the lowest p-value.

How To Choose A Feature Selection Method For Machine Learning

Statistical tests then determine whether the observed data fall outside the range of values predicted by the null hypothesis.

Four common methods of variable selection in multiple regression are:

1. All possible subsets (best subsets analysis)
2. Backward elimination
3. Forward selection
4. Stepwise selection (stepwise regression)

Various metrics and algorithms can help you determine which independent variables to include in your regression equation.
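Method 1, all possible subsets, can be sketched as an exhaustive search. The sketch assumes that adjusted R² is the ranking metric, although S, AIC or cross-validated error would also work. It also uses synthetic data, and `adjusted_r2` and `best_subset` are hypothetical helper names. The search fits 2^p − 1 models, so it is only practical for a modest number of predictors.

```python
from itertools import combinations

import numpy as np


def adjusted_r2(X, y):
    """Fit OLS with an intercept and return adjusted R-squared."""
    n, k = X.shape
    design = np.column_stack([np.ones(n), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    ss_res = resid @ resid
    ss_tot = ((y - y.mean()) ** 2).sum()
    r2 = 1 - ss_res / ss_tot
    # Penalize each extra predictor so larger subsets do not win by default.
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


def best_subset(X, y, names):
    """Try every non-empty subset of columns and keep the highest adjusted R^2."""
    best_score, best_cols = -np.inf, ()
    p = X.shape[1]
    for size in range(1, p + 1):
        for cols in combinations(range(p), size):
            score = adjusted_r2(X[:, list(cols)], y)
            if score > best_score:
                best_score, best_cols = score, cols
    return [names[c] for c in best_cols], best_score


# Synthetic data: only x1 and x3 actually drive y.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 4))
y = 2.0 * X[:, 0] - 1.5 * X[:, 2] + rng.normal(scale=0.5, size=100)
selected, score = best_subset(X, y, ["x1", "x2", "x3", "x4"])
print(selected, round(score, 3))
```

Adjusted R² can still admit a noise variable whose t-statistic happens to exceed 1 in absolute value. The winning subset therefore always contains the true predictors here, but it may contain extras.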

Whether this is a disadvantage depends on how you look at it: given three collinear variables, LASSO will select one and zero out the other two. Cross-validation (as Nick Sabbe discusses), penalized methods (Dikran Marsupial), or choosing variables based on prior theory (Michelle) are all options. To understand why variable selection is so potentially fraught, it may help to read my answer here.
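The collinearity behaviour described above can be illustrated with scikit-learn's `Lasso`. The example uses three exact copies of one synthetic predictor, and `alpha=0.1` is an arbitrary choice. With exact duplicates, the default cyclic coordinate-descent solver gives essentially all of the weight to the first copy. Which copy survives is therefore an artifact of solver order, not a property of the data.

```python
import numpy as np
from sklearn.linear_model import Lasso

rng = np.random.default_rng(1)
x = rng.normal(size=200)
# Three perfectly collinear copies of the same predictor.
X = np.column_stack([x, x, x])
y = 3.0 * x + rng.normal(scale=0.1, size=200)

model = Lasso(alpha=0.1).fit(X, y)
# The first coefficient carries the effect; the other two are (numerically) zero.
print(model.coef_)
```

Ridge regression would instead spread the effect roughly equally across the three copies.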

S, the standard error of the regression, should be small, because a smaller S indicates that the data points are closer to the fitted line. In the logistic regression example, the variables lwt, race, ptd, and ht are found to be statistically significant at the conventional level. Note, however, that variable selection is intrinsically a very difficult task.
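The quantity S can be computed directly from the residuals as S = sqrt(SSE / (n − p)), where p counts the intercept and every slope. Below is a minimal NumPy sketch on a toy dataset; `standard_error_of_regression` is a hypothetical helper name.

```python
import numpy as np


def standard_error_of_regression(X, y):
    """S = sqrt(SSE / (n - p)), where p counts the intercept and every slope."""
    n = len(y)
    design = np.column_stack([np.ones(n), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    p = design.shape[1]
    return np.sqrt(resid @ resid / (n - p))


x = np.array([1.0, 2.0, 3.0, 4.0])
y = np.array([2.0, 4.0, 5.0, 8.0])
S = standard_error_of_regression(x, y)
print(round(S, 4))  # fitted line y = 1.9x, SSE = 0.70, so S = sqrt(0.70 / 2) ≈ 0.5916
```

S is expressed in the units of the response, so compare S values only between models fitted to the same response variable.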

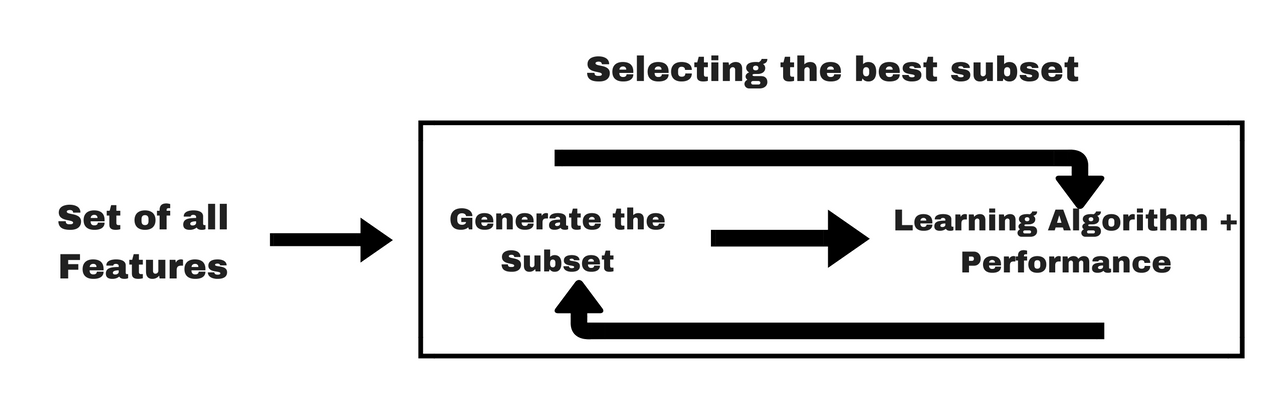

As you can see in the output, all variables except low are included in the logistic regression model. Continue until a stopping rule is reached. Filter-based feature selection methods use statistical measures to score the correlation or dependence between each input variable and the target; the scores are then used to filter the inputs down to the most relevant features.
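A filter method scores each input once and keeps the top scorers, without fitting the final model. This sketch assumes a numeric target, uses absolute Pearson correlation as the statistical measure, and runs on synthetic data. `correlation_filter` is a hypothetical helper name.

```python
import numpy as np


def correlation_filter(X, y, k):
    """Score each column by |Pearson r| with y and return the top-k column indices."""
    scores = np.array([abs(np.corrcoef(X[:, j], y)[0, 1]) for j in range(X.shape[1])])
    top_k = np.argsort(scores)[::-1][:k]  # highest scores first
    return top_k, scores


# Synthetic data: columns 3 and 1 carry the signal.
rng = np.random.default_rng(2)
X = rng.normal(size=(300, 5))
y = 4.0 * X[:, 3] + 2.0 * X[:, 1] + rng.normal(size=300)
top, scores = correlation_filter(X, y, k=2)
print(top, scores.round(2))
```

Correlation only detects linear, one-at-a-time relationships. That limitation is one reason the choice of statistical measure has to match the variable types.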

Using statistics to decide which variables should be included in a model is popular among scientists. Statistical tests can determine whether a predictor variable has a statistically significant relationship with an outcome variable. In many model-building situations, variable selection is useful for improving predictions, for minimizing the number of variables, and for other purposes such as reducing costs.

Start with all variables in the model. Common misconceptions often lead to inappropriate application of variable selection.

Next, we move the result to a new DataFrame. Step 1: List and count the n variables in the problem. Remove the variable with the largest p-value, that is, the variable that is the least statistically significant.
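Backward elimination can be sketched as follows. The procedure starts with all variables, removes the one with the largest p-value, refits, and stops once every remaining p-value is at or below a threshold. The sketch assumes ordinary least squares with an intercept, two-sided t-tests, and a cutoff of `alpha=0.05`. `ols_pvalues` and `backward_elimination` are hypothetical names, and the data are synthetic.

```python
import numpy as np
from scipy import stats


def ols_pvalues(X, y):
    """Two-sided t-test p-values for each slope of an OLS fit with intercept."""
    n = len(y)
    design = np.column_stack([np.ones(n), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    dof = n - design.shape[1]
    sigma2 = resid @ resid / dof
    cov = sigma2 * np.linalg.inv(design.T @ design)
    t = coef / np.sqrt(np.diag(cov))
    return 2 * stats.t.sf(np.abs(t), dof)[1:]  # drop the intercept's p-value


def backward_elimination(X, y, names, alpha=0.05):
    keep = list(range(X.shape[1]))
    while keep:
        pvals = ols_pvalues(X[:, keep], y)
        worst = int(np.argmax(pvals))
        if pvals[worst] <= alpha:
            break  # stopping rule: every remaining variable is significant
        keep.pop(worst)  # drop the least significant variable, then refit
    return [names[j] for j in keep]


rng = np.random.default_rng(6)
X = rng.normal(size=(200, 5))
y = 1.5 * X[:, 0] - 2.0 * X[:, 2] + rng.normal(size=200)
kept = backward_elimination(X, y, [f"x{j}" for j in range(5)])
print(kept)
```

Each noise variable has roughly a 5% chance of surviving by accident. This is one concrete way p-value-driven selection goes wrong.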

In other words, how much of the variance in a continuous dependent variable is explained by a set of predictors? The basis of multiple linear regression is to assess whether one continuous dependent variable can be predicted from a set of independent (predictor) variables. We could use the individual p-values and refit the model with only the significant terms.
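The one-shot shortcut described above is to fit once, keep the terms with p < 0.05, and refit. This sketch also reports R² for both fits. It assumes OLS with an intercept, t-test p-values, and synthetic data. `fit_ols` is a hypothetical helper name.

```python
import numpy as np
from scipy import stats


def fit_ols(X, y):
    """Return (R^2, slope p-values) for an OLS fit with an intercept."""
    n = len(y)
    design = np.column_stack([np.ones(n), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    dof = n - design.shape[1]
    se = np.sqrt(resid @ resid / dof * np.diag(np.linalg.inv(design.T @ design)))
    pvals = 2 * stats.t.sf(np.abs(coef / se), dof)
    r2 = 1 - (resid @ resid) / ((y - y.mean()) @ (y - y.mean()))
    return r2, pvals[1:]


rng = np.random.default_rng(3)
X = rng.normal(size=(150, 4))
y = 1.0 + 2.0 * X[:, 0] + 0.8 * X[:, 3] + rng.normal(size=150)

r2_full, pvals = fit_ols(X, y)
significant = np.flatnonzero(pvals < 0.05)  # columns kept for the refit
r2_reduced, _ = fit_ols(X[:, significant], y)
print(significant, round(r2_full, 3), round(r2_reduced, 3))
```

Because the reduced model is nested in the full one, its R² can never be higher. The useful question is whether the loss is small.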

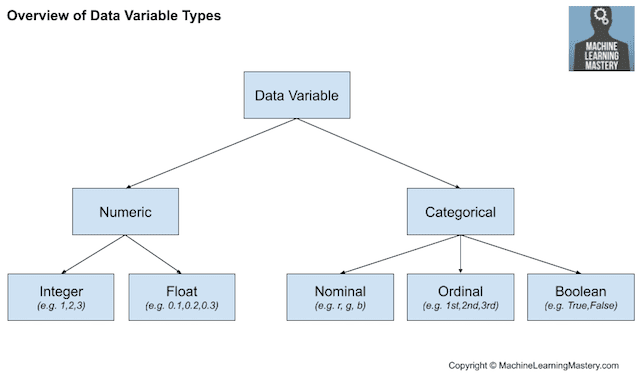

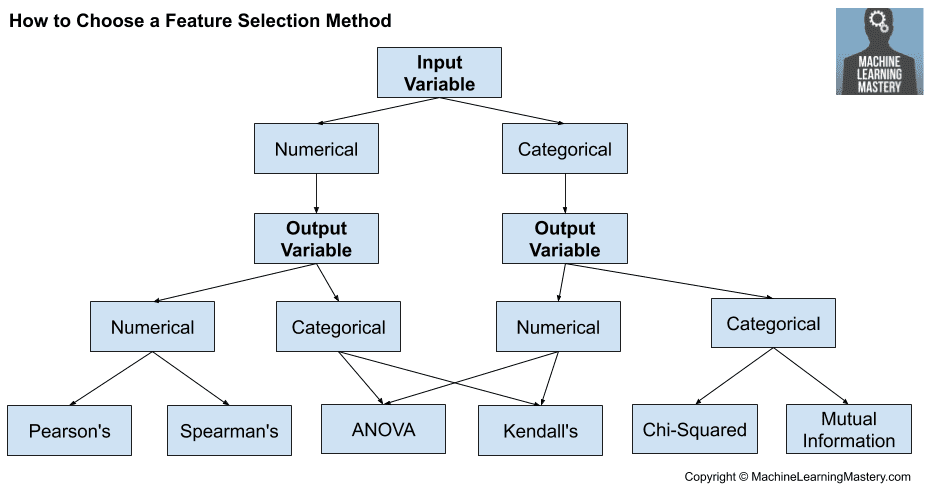

In R, the full model can be denoted by using the `.` symbol on the right-hand side of the formula. In Python, `fs = SelectKBest(score_func=chi2, k='all')`, `fs.fit(X_train, y_train)`, `X_train_fs = fs.transform(X_train)` and `X_test_fs = fs.transform(X_test)` score every input feature. We can then print the scores for each variable (larger is better) and plot them as a bar graph to get an idea of how many features we should select. Statistical measures for feature selection must be carefully chosen based on the data types of the input variables and the output (response) variable.
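Here is a self-contained version of the `SelectKBest` snippet. It assumes the bundled Iris dataset as stand-in data, because chi-squared scoring needs non-negative features and a categorical target. The split size and `random_state` are arbitrary choices.

```python
from sklearn.datasets import load_iris
from sklearn.feature_selection import SelectKBest, chi2
from sklearn.model_selection import train_test_split

# Iris: non-negative numeric inputs, categorical target (suits chi2).
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.33, random_state=1)

fs = SelectKBest(score_func=chi2, k="all")  # score every feature, drop none
fs.fit(X_train, y_train)
X_train_fs = fs.transform(X_train)
X_test_fs = fs.transform(X_test)

for i, score in enumerate(fs.scores_):
    print(f"Feature {i}: {score:.3f}")
```

If matplotlib is installed, you can draw the bar graph with `plt.bar(range(len(fs.scores_)), fs.scores_)`. Once you have picked a cutoff, replace `k="all"` with an integer.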

`result = pd.DataFrame()` followed by `result['diagnosis'] = data.iloc[:, 0]` (creating a DataFrame with the ...). The new (p - 1)-variable model is fit, and the variable with the largest p-value is removed. In forward selection, pick the best single variable, then fit two-variable models: each one pairs the variable already selected in the previous step with one of the remaining variables, tried one at a time.
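The forward procedure described above can be sketched as follows. It starts with the single predictor that has the lowest p-value, then tries adding each remaining variable to those already chosen, and stops when no candidate is significant. The sketch assumes OLS with an intercept, t-test p-values, and an entry threshold of `alpha=0.05`. `last_coef_pvalue` and `forward_selection` are hypothetical names, and the data are synthetic.

```python
import numpy as np
from scipy import stats


def last_coef_pvalue(X, y):
    """p-value of the last column's slope in an OLS fit with intercept."""
    n = len(y)
    design = np.column_stack([np.ones(n), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    dof = n - design.shape[1]
    se = np.sqrt(resid @ resid / dof * np.linalg.inv(design.T @ design)[-1, -1])
    return 2 * stats.t.sf(abs(coef[-1] / se), dof)


def forward_selection(X, y, names, alpha=0.05):
    selected, remaining = [], list(range(X.shape[1]))
    while remaining:
        # Try adding each remaining variable to the already-selected ones.
        pvals = {j: last_coef_pvalue(X[:, selected + [j]], y) for j in remaining}
        best = min(pvals, key=pvals.get)
        if pvals[best] > alpha:
            break  # stopping rule: no candidate is significant
        selected.append(best)
        remaining.remove(best)
    return [names[j] for j in selected]


rng = np.random.default_rng(5)
X = rng.normal(size=(200, 5))
y = 3.0 * X[:, 4] + 1.0 * X[:, 0] + rng.normal(size=200)
chosen = forward_selection(X, y, [f"x{j}" for j in range(5)])
print(chosen)
```

A full stepwise method would also re-check, after each addition, whether any previously selected variable has become non-significant and should be dropped. This sketch omits that step.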

Using the study and the data, we introduce four methods for variable selection. This gives us n = 5 variables. The first argument is a matrix or data frame of the explanatory variables, the second is the threshold value to use for retaining variables, and the third is a logical argument indicating whether text output is ...

We can begin with the full model. LASSO allows p > n but will only include up to n variables, and it is good at getting rid of (zeroing out) non-useful variables. We emphasize that variable selection, and all the problems related to it, can often be avoided by the use of expert knowledge.

When we fit a multiple regression model, we use the p-value in the ANOVA table to determine whether the model as a whole is significant. The nonlinear model provides a better fit because it is both unbiased and produces smaller residuals. Statistical tests can also estimate the difference between two or more groups.
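The ANOVA p-value for the model as a whole comes from the overall F-test. That test compares the fitted model with an intercept-only model, using F = (SSR / k) / (SSE / (n − k − 1)). Below is a minimal sketch on synthetic data; `overall_f_test` is a hypothetical helper name.

```python
import numpy as np
from scipy import stats


def overall_f_test(X, y):
    """ANOVA F-test of H0: all slopes are zero (intercept-only model)."""
    n, k = X.shape
    design = np.column_stack([np.ones(n), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    sse = ((y - design @ coef) ** 2).sum()   # residual (error) sum of squares
    sst = ((y - y.mean()) ** 2).sum()        # total sum of squares
    ssr = sst - sse                          # regression sum of squares
    f_stat = (ssr / k) / (sse / (n - k - 1))
    return f_stat, stats.f.sf(f_stat, k, n - k - 1)


rng = np.random.default_rng(4)
X = rng.normal(size=(80, 3))
y = 0.5 * X[:, 1] + rng.normal(size=80)
f_stat, p_value = overall_f_test(X, y)
print(round(f_stat, 2), p_value)
```

A significant F-test only says that at least one slope is non-zero. It does not say which variables to keep.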

The function uses three arguments. Step 2: List the dimensions of each variable in terms of the fundamental dimensions. Here we have the lift force F, the speed of the aircraft V, the speed of sound in air c, the aircraft wing area A, and the freestream density ρ.
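The two steps above appear to come from the Buckingham Pi procedure. Under that assumption, the next step is to count the dimensionless groups, which equals n − k, where k is the rank of the dimension matrix. The sketch encodes F, V, c, A and ρ in terms of mass (M), length (L) and time (T). It then checks two candidate groups: the Mach number V/c, and F/(ρV²A), which is proportional to the lift coefficient.

```python
import numpy as np

# Exponents of (M, L, T) for each variable, one column per variable.
names = ["F", "V", "c", "A", "rho"]
dims = np.array([
    [1, 0, 0, 0, 1],     # M (mass)
    [1, 1, 1, 2, -3],    # L (length)
    [-2, -1, -1, 0, 0],  # T (time)
])

n = dims.shape[1]                # number of variables
k = np.linalg.matrix_rank(dims)  # number of independent dimensions
print(f"n = {n}, k = {k}, dimensionless groups = {n - k}")

# Exponent vectors (over F, V, c, A, rho) for two candidate groups.
mach = np.array([0, 1, -1, 0, 0])          # V / c
lift_group = np.array([1, -2, 0, -1, -1])  # F / (rho V^2 A)
print(dims @ mach, dims @ lift_group)      # all zeros means dimensionless
```

This prints `n = 5, k = 3, dimensionless groups = 2`. Both exponent checks return zero vectors, so these two groups are a valid choice.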

Variable selection deals with one of the most difficult problems in chemometrics: selecting variables for regression and classification. They can be used to ... In VBA, we can select any range of cells, or a group of cells, and perform different sets of operations on them. A selection is a Range object, so we use the Range method to identify the cells and the Select command to select them; the syntax is `Range("A1:B2").Select`.

You can use various model selection statistics to help you decide on the best regression model. For the linear model, S is 725, while for the nonlinear model it is 137. Some people prefer to see the variables share the effect.

Statistical tests assume a null hypothesis of no relationship or no difference between groups. Certain regression selection approaches are helpful in testing predictors, thereby increasing the efficiency of ... Among all 89 clini- ...

A natural next question to ask is which predictors, among a larger set of all potential predictors, are important. Does that mean we should use the same control variables as the previous literature, or can we use the most suitable variables? If the p-value is higher than the threshold, we discard that combination of features.
